How is color processing performed on my camera’s images?

Last Revision Date: 6/5/2014

This article explains how color images are achieved with color sensors that use the Bayer Tile Pattern.

Some cameras convert the raw Bayer-tiled images produced by the sensor into color on board the camera. For all of our cameras, color processing can instead be performed by software on the PC, which reduces the bandwidth needed to transfer images and gives users the flexibility to implement their own algorithms. To determine whether your camera performs on-board color processing, refer to the Technical Reference Manual for your camera on the downloads site.

In color versions of our cameras, varying intensities of light are measured on a rectangular grid of sensor elements (pixels). To construct a color image, a Color Filter Array (CFA) must be placed between the lens and the CCD sensor elements. A CFA typically has one color filter element for each sensor element. In this arrangement, each pixel in the detector samples the intensity of just one of the color separations.

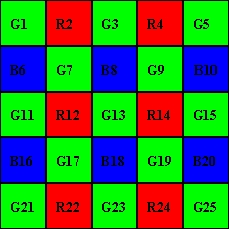

The recovery of full-color images from a CFA-based detector requires a method of calculating the values of the other color separations at each pixel. The most popular CFA layout is the Bayer Tile Pattern, which uses the three additive primary colors, red, green and blue (RGB), for the filter elements. All color sensors in our cameras use the Bayer Tile Pattern. This pattern is essentially a grid of band-pass filters, each of which allows only a certain band (color) of light through. The tiling is made up of red filters, green filters and blue filters. Thus each pixel in the raw image records only red, green or blue (see the example image).

This image is an example only and does not represent the actual Bayer Tile Mapping of your camera.
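The effect of the filter array can be sketched in a few lines of Python. This is an illustration only: the 2x2 tile layout `TILE` (red, green / green, blue) and the helper names `filter_color` and `mosaic` are assumptions made for the example, and the actual tile mapping depends on your camera.

```python
# Assumed 2x2 tile layout, repeated across the sensor (illustrative only;
# the real layout depends on the camera model).
TILE = (("R", "G"),
        ("G", "B"))

CHANNEL = {"R": 0, "G": 1, "B": 2}


def filter_color(row, col, tile=TILE):
    """Return which color filter covers the pixel at (row, col)."""
    return tile[row % 2][col % 2]


def mosaic(rgb_image, tile=TILE):
    """Simulate a CFA: keep only the filtered channel at each pixel.

    rgb_image is a list of rows; each row is a list of (r, g, b) tuples.
    The result is a list of rows of single intensity values.
    """
    return [
        [pixel[CHANNEL[filter_color(r, c, tile)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


# A uniform 4x4 scene in which every point has the color (200, 100, 50).
scene = [[(200, 100, 50)] * 4 for _ in range(4)]
raw = mosaic(scene)
for row in raw:
    print(row)
```

Under the assumed layout, the first row of `raw` alternates between the red and green samples and the second row between the green and blue samples, so each pixel carries one third of the color information in the scene.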

The samples from the three color channels are then run through a demosaicing algorithm, which combines the value of each pixel with those of its eight neighbors to produce a full 24-bit color value (8 bits each for red, green and blue) for that pixel.
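As a rough sketch of the idea, the following Python function fills in the missing channels by averaging the same-color samples in each pixel's 3x3 window. It is a simple neighborhood average chosen for clarity, not the algorithm used by the camera or its software; the tile layout `TILE` and the function name `demosaic` are assumptions made for the example.

```python
TILE = (("R", "G"),
        ("G", "B"))  # assumed tile layout, for illustration only
CHANNEL = {"R": 0, "G": 1, "B": 2}


def demosaic(raw, tile=TILE):
    """Estimate an (r, g, b) tuple per pixel from a single-channel Bayer image.

    Each sample in the 3x3 window (the pixel and up to eight neighbors) is
    added to the running sum of the channel its filter passes. Missing
    channels are the integer mean of those samples; the channel the pixel
    measured itself keeps its measured value.
    """
    height, width = len(raw), len(raw[0])
    if height < 2 or width < 2:
        raise ValueError("image must be at least 2x2 to contain all three colors")

    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = [0, 0, 0]
            counts = [0, 0, 0]
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        ch = CHANNEL[tile[rr % 2][cc % 2]]
                        sums[ch] += raw[rr][cc]
                        counts[ch] += 1
            rgb = [s // n for s, n in zip(sums, counts)]
            rgb[CHANNEL[tile[r % 2][c % 2]]] = raw[r][c]  # keep measured sample
            out_row.append(tuple(rgb))
        out.append(out_row)
    return out


# Raw Bayer data for a uniform scene of color (200, 100, 50).
raw = [
    [200, 100, 200, 100],
    [100, 50, 100, 50],
    [200, 100, 200, 100],
    [100, 50, 100, 50],
]
full = demosaic(raw)
print(full[0][0])
```

With 8-bit samples, each output tuple holds three 8-bit values, which together form the 24-bit color value. Windows at the image border simply use the neighbors that exist; production algorithms typically weight neighbors and follow edges to reduce color artifacts.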